Revision 11 as of 2016-05-04 22:09:51


Cleveland Clinic

The Open Knee(s) team has access to a high performance computing cluster located at the Cleveland Clinic. This infrastructure is available only to Cleveland Clinic employees and only from within the Cleveland Clinic intranet.

Compute Nodes

Specifications of cluster nodes (as of April 14, 2015) are:

Hostname. lri0[01-16]

Processors. 2 x Intel Xeon E5-2680v2

Processor Clock Speed. 2.80GHz

Cores. 2 x 10 (10 per processor)

Memory. 64GB

Memory Speed. 1666

SLURM features. rack-m08, intel, R620

Hostname. lri0[17-20]

Processors. 2 x Intel Xeon E5-2680v2

Processor Clock Speed. 2.80GHz

Cores. 2 x 10 (10 per processor)

Memory. 64GB

Memory Speed. 1866

SLURM features. rack-m08, intel, R620

Operating System. CentOS release 6.5

Usage for Open Knee(s)

Before using the cluster, one needs

- an account on the Cleveland Clinic computing cluster

- an SSH client to access the command prompt of the computing cluster

- an SFTP client to upload files to and download files from the computing cluster

Once an account is available, Open Knee(s) simulations can be run on this infrastructure using the following steps. The examples below use erdemira as the user name.

- Connect to the computing cluster using an SSH client:

ssh erdemira@hpc-login

- Change directory to the 'lustre' folder:

cd lustre

- Create a new directory to keep simulation files (create one folder for each simulation):

mkdir SampleSimulation

- Change directory to the simulation folder:

cd SampleSimulation/

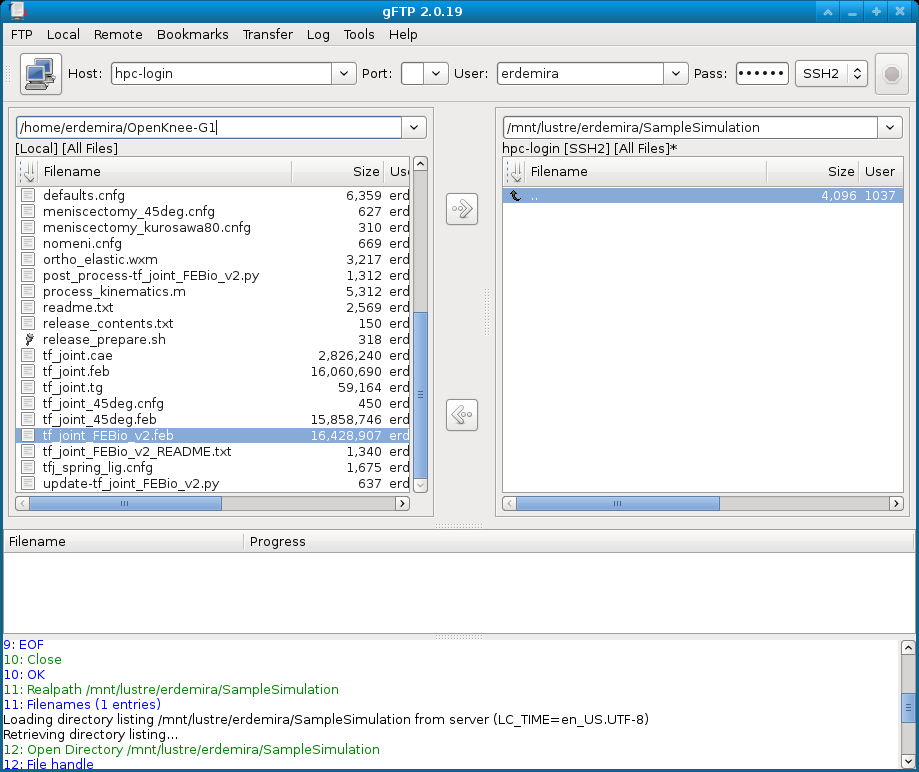
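When many simulations are set up, the directory steps above can be wrapped in a small helper. This is only a sketch: `new_simulation_dir` is a hypothetical name, and the commented usage assumes the 'lustre' folder sits directly in the home directory, as the `cd lustre` step suggests.

```shell
# Hypothetical helper: create one folder per simulation under a base
# directory and print the path of the new folder.
new_simulation_dir() {
    base_dir="$1"   # on the cluster this would be "$HOME/lustre" (assumption)
    sim_name="$2"   # e.g. SampleSimulation
    mkdir -p "$base_dir/$sim_name" && printf '%s\n' "$base_dir/$sim_name"
}

# Demonstration in a throwaway directory, so nothing on the cluster is touched.
demo_base="$(mktemp -d)"
new_simulation_dir "$demo_base" SampleSimulation
rm -rf "$demo_base"

# On the cluster, the equivalent of the steps above would be:
#   cd "$(new_simulation_dir "$HOME/lustre" SampleSimulation)"
```

Using `mkdir -p` means the helper does not fail if the folder already exists, which may or may not be desirable when each simulation is meant to have its own folder.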

- Connect to the computing cluster using an SFTP client to upload the model:

- Use gftp for a GUI-based client (host: hpc-login, username: erdemira)

Change the remote directory to the simulation folder, e.g. SampleSimulation

Change the local directory to where your model resides, e.g. /home/erdemira/OpenKnee-G1

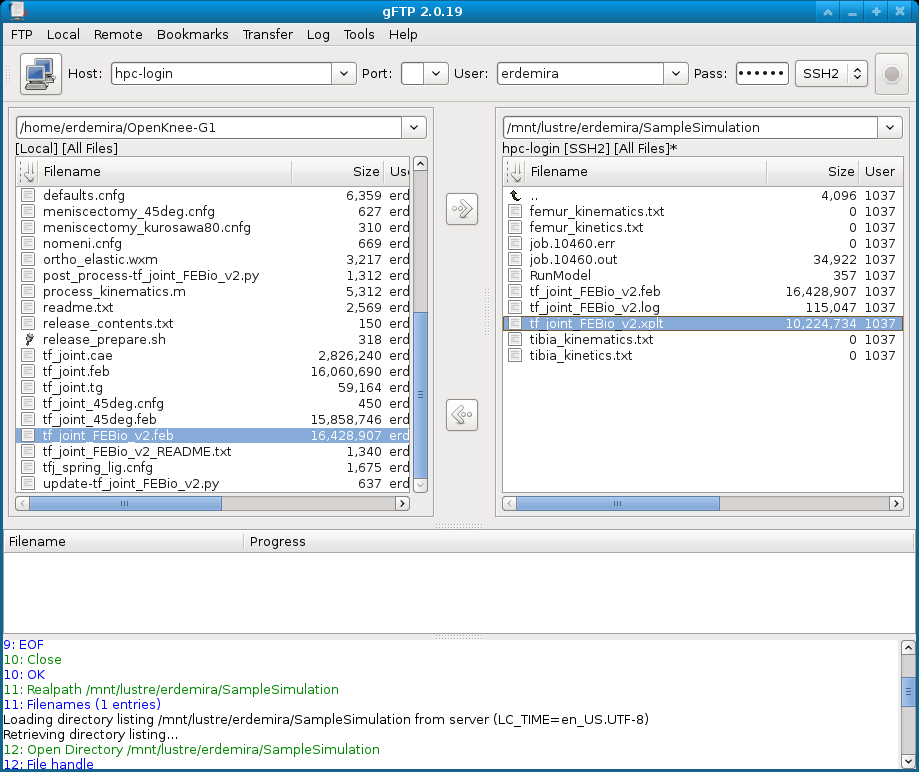

- Upload model to computing cluster, e.g. tf_joint_FEBio_v2.feb
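For those who prefer the command line to gftp, the same upload can probably be done with `scp`. The sketch below assumes an OpenSSH `scp` client is installed locally and that the simulation folder is `lustre/SampleSimulation` relative to the remote home directory; `upload_model` and `DRY_RUN` are hypothetical names introduced for illustration.

```shell
# Hypothetical helper: copy a model file into the remote simulation folder.
# With DRY_RUN=1 the scp command is printed instead of executed.
upload_model() {
    model_file="$1"      # e.g. tf_joint_FEBio_v2.feb
    remote_target="$2"   # e.g. erdemira@hpc-login:lustre/SampleSimulation/
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf 'scp %s %s\n' "$model_file" "$remote_target"
    else
        scp "$model_file" "$remote_target"
    fi
}

# Preview the command without contacting the cluster.
DRY_RUN=1 upload_model tf_joint_FEBio_v2.feb erdemira@hpc-login:lustre/SampleSimulation/
```

Dropping `DRY_RUN=1` performs the actual transfer, which only works from inside the Cleveland Clinic intranet.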

- In the SSH client, list directory to see the model file:

ls

tf_joint_FEBio_v2.feb

- Create a file with a text editor, e.g. Nano, to use as a batch script:

nano RunModel

- Insert the job submission settings into the file as follows:

#!/bin/bash
#SBATCH --mail-type=ALL
#SBATCH --mail-user=erdemira@ccf.org
#SBATCH --job-name=OKS-G1
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=16
#SBATCH --time=24:00:00
#SBATCH --mem=56000
#SBATCH --error=job.%J.err
#SBATCH --output=job.%J.out
export OMP_NUM_THREADS=16
module load FEBio/2.1.2
srun febio2.lnx64 -i tf_joint_FEBio_v2.feb

- mail-user: specify your e-mail to receive updates on job progress

- job-name: provide a title for the job

- nodes: specify number of nodes to use (FEBio only supports shared memory computing, therefore this should be set to 1)

- ntasks: number of computing tasks (FEBio only supports shared memory computing, therefore this should be set to 1)

- cpus-per-task: set the number of programming threads per computing task (set to the desired number of computing cores; must be less than or equal to the number of cores available on the node)

- time: set the desired time allocation for the simulation (hh:mm:ss)

- mem: set the desired memory allocation for the simulation (MB)

- error: file name to which errors are written

- output: file name to which screen output is written

- The use of OMP_NUM_THREADS allows FEBio to access the specified number of programming threads

- The FEBio module should be loaded so that the system knows where the FEBio executables are

- srun starts the simulation with the input file tf_joint_FEBio_v2.feb
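Because cpus-per-task and OMP_NUM_THREADS should carry the same value, one option is to generate the batch script from a single core count. The following is a minimal sketch, not the team's actual workflow: `write_run_script` is a hypothetical helper, the mail options are left out for brevity, and the `#!/bin/bash` first line is included because sbatch expects a batch script to begin with an interpreter line.

```shell
# Hypothetical helper: write a batch script in which --cpus-per-task and
# OMP_NUM_THREADS are always set to the same number.
write_run_script() {
    script_path="$1"   # e.g. RunModel
    model_file="$2"    # e.g. tf_joint_FEBio_v2.feb
    cpu_count="$3"     # must not exceed the cores available on one node
    # Unquoted EOF: $cpu_count and $model_file are expanded while writing.
    cat > "$script_path" <<EOF
#!/bin/bash
#SBATCH --job-name=OKS-G1
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --cpus-per-task=$cpu_count
#SBATCH --time=24:00:00
#SBATCH --mem=56000
#SBATCH --error=job.%J.err
#SBATCH --output=job.%J.out
export OMP_NUM_THREADS=$cpu_count
module load FEBio/2.1.2
srun febio2.lnx64 -i $model_file
EOF
}

# Usage on the cluster, inside the simulation folder:
#   write_run_script RunModel tf_joint_FEBio_v2.feb 16
```

An alternative that avoids generating the file is to write `export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK` in the script, since SLURM sets that variable inside the job when cpus-per-task is given.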

- Submit the simulation to the scheduler in the SSH client:

sbatch RunModel

Submitted batch job 10460
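The job number in this message is what later commands refer to, so it can be convenient to capture it. A small sketch, assuming the confirmation message has exactly the form shown above; `job_id_from_message` is a hypothetical helper name.

```shell
# Extract the numeric job ID from sbatch's confirmation message,
# e.g. "Submitted batch job 10460" gives "10460".
job_id_from_message() {
    printf '%s\n' "$1" | awk '/^Submitted batch job/ { print $4 }'
}

# Demonstration with the message shown above.
job_id="$(job_id_from_message "Submitted batch job 10460")"
echo "$job_id"
```

On the cluster this could be used as `job_id="$(job_id_from_message "$(sbatch RunModel)")"`, after which `squeue -j "$job_id"` shows only that job and `scancel "$job_id"` cancels it.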

- You should receive an e-mail stating that a SLURM job has started. You will also get an e-mail when the job ends. You can exit the SSH client if you want.

- In the SSH client, squeue lists the queued and running jobs, e.g.

squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

10460 defq OKS-G1 erdemira R 1:38 1 lri004
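To check a single job from a script, the state column (ST) can be picked out of this listing. The sketch below assumes the default column layout shown above and job names without spaces; `job_state` is a hypothetical helper, and the sample text is copied from the listing rather than produced by a live cluster.

```shell
# Print the state column (ST) for a given job ID from squeue-style output
# read on standard input; prints nothing if the job is not listed.
job_state() {
    awk -v id="$1" 'NR > 1 && $1 == id { print $5 }'
}

# Sample listing copied from the example above.
sample_output='JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)
10460 defq OKS-G1 erdemira R 1:38 1 lri004'

printf '%s\n' "$sample_output" | job_state 10460
```

On the cluster the input would come from `squeue | job_state 10460`. Running `squeue -u erdemira` instead limits the listing to one user's jobs.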